OpenStack, Puppet used to build cloud for world's largest particle accelerator.

CERN data center equipment in Geneva.

CERN, the European Organization for Nuclear Research, has opened a new data center and is building a cloud network for scientists conducting experiments with data from the Large Hadron Collider on the Franco-Swiss border.

CERN's pre-existing data center in Geneva, Switzerland, is limited to about 3.5 megawatts of power. "We can't get any more electricity onto the site because the CERN accelerator itself needs about 120 megawatts," Tim Bell, CERN's infrastructure manager, told Ars.

The solution was to open an additional data center in Budapest, Hungary, which provides another 2.7 megawatts. The data center came online in January with about 700 "white box" servers to start. Eventually, the Budapest site will have at least 5,000 servers, in addition to the 11,000 in Geneva. By 2015, Bell expects about 150,000 virtual machines to be running on those 16,000 physical servers.
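The capacity figures above combine with simple arithmetic. This back-of-the-envelope sketch uses only the numbers quoted in the article; the VMs-per-server figure is a naive average that assumes VMs are spread evenly over every machine and ignores any servers left on bare metal, so it is not a number CERN has published.

```python
# Figures quoted in the article (Budapest is the "at least" projection).
GENEVA_SERVERS = 11_000
BUDAPEST_SERVERS = 5_000
TARGET_VMS = 150_000  # Bell's expectation for 2015
GENEVA_MW = 3.5
BUDAPEST_MW = 2.7


def capacity_summary() -> dict:
    """Combine both sites and derive a naive average VM density."""
    total_servers = GENEVA_SERVERS + BUDAPEST_SERVERS
    return {
        "total_servers": total_servers,
        "total_mw": GENEVA_MW + BUDAPEST_MW,
        # Simple average: assumes VMs are spread evenly across every server.
        "vms_per_server": TARGET_VMS / total_servers,
    }


if __name__ == "__main__":
    summary = capacity_summary()
    print(f"servers: {summary['total_servers']:,}")
    print(f"power: {summary['total_mw']:.1f} MW")
    print(f"VMs per server: {summary['vms_per_server']:.1f}")
```

Spread over all 16,000 servers, the 2015 target works out to a little over nine VMs per physical machine.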

But the extra servers and megawatts matter less than how CERN will use its new capacity. CERN plans to move almost everything onto OpenStack, an open source platform for building infrastructure-as-a-service clouds similar to Amazon's Elastic Compute Cloud (EC2).

Automating the data center

OpenStack pools compute, storage, and networking equipment so that all of a data center's resources can be managed and provisioned from a single point. Scientists will be able to request whatever amount of CPU, memory, and storage they need and receive a virtual machine with those resources within 15 minutes. CERN runs OpenStack on Scientific Linux and uses it together with the Puppet IT automation software.
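In OpenStack Compute (Nova), a VM's size comes from a "flavor" that bundles a vCPU count, RAM, and root-disk size, and a server is then booted from an image with a chosen flavor. As a minimal sketch that assumes nothing about CERN's own tooling, the Python below only builds the JSON bodies for Nova's `POST /flavors` and `POST /servers` calls without sending them. The flavor ID, server name, image ID, and sizes are hypothetical placeholders, and a real request may also need network settings.

```python
import json


def flavor_body(flavor_id: str, vcpus: int, ram_mb: int, disk_gb: int) -> dict:
    """Body for Nova's POST /flavors. RAM is given in MiB, root disk in GiB."""
    if min(vcpus, ram_mb, disk_gb) <= 0:
        raise ValueError("vcpus, ram_mb and disk_gb must be positive")
    return {
        "flavor": {
            "id": flavor_id,
            "name": flavor_id,
            "vcpus": vcpus,
            "ram": ram_mb,
            "disk": disk_gb,
        }
    }


def server_body(name: str, image_ref: str, flavor_ref: str) -> dict:
    """Body for Nova's POST /servers: boot image_ref with flavor_ref."""
    return {"server": {"name": name, "imageRef": image_ref, "flavorRef": flavor_ref}}


if __name__ == "__main__":
    # Hypothetical request: 6 vCPUs, 24 GiB of RAM, 80 GiB of disk.
    flavor = flavor_body("analysis-6c-24g", vcpus=6, ram_mb=24 * 1024, disk_gb=80)
    server = server_body("analysis-vm-01", image_ref="IMAGE_UUID",
                         flavor_ref=flavor["flavor"]["id"])
    print(json.dumps(flavor, indent=2))
    print(json.dumps(server, indent=2))
```

By default only administrators may create flavors in Nova, so a self-service portal offering arbitrary sizes would have to create or select flavors on the user's behalf.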

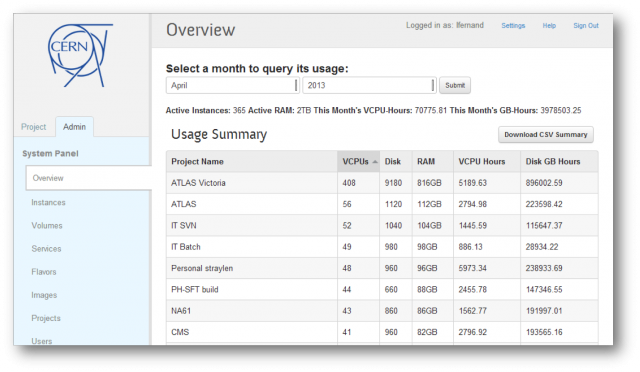

CERN dashboard for managing OpenStack resources. Credit: CERN

CERN is deploying the OpenStack cloud this month, and pilot testers are already using it to create 250 new VMs each day. By 2015, Bell hopes to have all of CERN's computing resources running on OpenStack. About 90 percent of servers would be virtualized, but even the remaining 10 percent would be managed with OpenStack.

"For the remaining 10 percent we're looking at using OpenStack to manage the bare metal provisioning," Bell said. "To have a single overriding orchestration layer helps us greatly when we're looking at accounting, because we're able to tell which projects are using which resources in a single place rather than having islands of resources."

CERN began virtualizing servers with the KVM and Hyper-V hypervisors a few years ago, deploying Linux on KVM and Windows on Hyper-V. Virtualization was an improvement over the old days, when scientists who needed an unusual configuration could wait months to procure a new physical server. But even with virtualization and Microsoft's System Center Virtual Machine Manager software, the options remained limited.

Scientists had a Web interface to request virtual machines, but "we were only offering four different configurations," Bell said. With OpenStack, scientists will be able to ask for whatever combination of resources they'd like, and they can upload their own operating system images to run on their virtual machines.
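Uploading a custom image through Glance's v2 API is a two-step process: `POST /v2/images` registers an image record (name, disk format, container format, visibility), and a `PUT` to `/v2/images/{image_id}/file` then streams the image bytes. The sketch below only builds the request body and upload path; the image name and ID are hypothetical, and the set of allowed disk formats is an assumed subset of what a Glance installation might accept, since that list is configurable.

```python
import json

# Assumed subset of disk formats; the real list is set in Glance's configuration.
DISK_FORMATS = {"raw", "qcow2", "vhd", "vmdk", "iso"}


def image_create_body(name: str, disk_format: str = "qcow2",
                      visibility: str = "private") -> dict:
    """Body for Glance v2 POST /v2/images, which registers the image record."""
    if disk_format not in DISK_FORMATS:
        raise ValueError(f"unsupported disk format: {disk_format}")
    return {
        "name": name,
        "disk_format": disk_format,
        "container_format": "bare",  # plain disk image, no wrapper format
        "visibility": visibility,
    }


def upload_path(image_id: str) -> str:
    """Path for the follow-up PUT that streams the image bytes to Glance."""
    return f"/v2/images/{image_id}/file"


if __name__ == "__main__":
    body = image_create_body("my-custom-linux")  # hypothetical image name
    print(json.dumps(body, indent=2))
    print(upload_path("IMAGE_UUID"))
```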

"We provide standard Windows and Scientific Linux images," Bell said. Those OS images are pre-loaded on cloud VMs and supported by the IT department. Scientists can load other operating systems, but they do so without official support from the IT department.

OpenStack will also make it easier to scale to the planned 150,000 virtual machines and to manage them all.

CERN relies on three OpenStack components: Nova, the compute service that provides the infrastructure-as-a-service layer, with fault tolerance and API compatibility with Amazon EC2 and other clouds; Keystone, an identity and authentication service; and Glance, designed for "discovering, registering, and retrieving virtual machine images."
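In a standard deployment, Glance and Nova do not check passwords themselves: a client first obtains a token from Keystone and then presents it to the other services in an `X-Auth-Token` header. The sketch below builds the password-authentication request body for Keystone's v3 API (`POST /v3/auth/tokens`, which returns the token in the `X-Subject-Token` response header). The user, project, and domain names are hypothetical, and CERN's deployment, with its Active Directory integration, may have authenticated differently.

```python
import json


def password_auth_body(user: str, password: str, project: str,
                       domain: str = "Default") -> dict:
    """Body for Keystone v3 POST /v3/auth/tokens, scoped to a single project."""
    return {
        "auth": {
            "identity": {
                "methods": ["password"],
                "password": {
                    "user": {
                        "name": user,
                        "domain": {"name": domain},
                        "password": password,
                    }
                },
            },
            # Scoping the token to a project is what ties later Nova and
            # Glance requests (and the resources they create) to that project.
            "scope": {"project": {"name": project, "domain": {"name": domain}}},
        }
    }


if __name__ == "__main__":
    body = password_auth_body("jdoe", "not-a-real-password", "physics-analysis")
    print(json.dumps(body, indent=2))
```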

CERN uses Puppet to configure both the virtual machines and the OpenStack components themselves. "This allows easy configuration of new instances such as Web servers using standard recipes from Puppet Forge," a repository of modules built by the Puppet open source community, Bell said.

There is still some manual work each time a virtual machine is allocated, but CERN plans to automate that process further using OpenStack's Heat orchestration software. All of this will make it easier to give different users different operating system images and to upgrade users from Scientific Linux 5 to Scientific Linux 6. (Scientific Linux is built from the Red Hat Enterprise Linux source code and tailored to scientific users.)
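Heat automates this kind of setup with templates that describe resources declaratively. The sketch below assumes a hypothetical Puppet master host and an image that already has cloud-init and the Puppet agent installed; it builds a minimal HOT template in which one `OS::Nova::Server` runs `puppet agent --test` on first boot. Heat also accepts templates written as JSON, so the dictionary can be serialized with `json.dumps`. This illustrates the technique and is not CERN's configuration.

```python
import json


def puppet_server_template(puppet_master: str) -> dict:
    """Minimal Heat (HOT) template: one Nova server that runs Puppet at first boot."""
    boot_script = (
        "#!/bin/sh\n"
        # Ask the (hypothetical) Puppet master for this node's configuration.
        f"puppet agent --test --server {puppet_master}\n"
    )
    return {
        "heat_template_version": "2013-05-23",
        "parameters": {
            "image": {"type": "string", "description": "Glance image name or ID"},
            "flavor": {"type": "string", "description": "Nova flavor name or ID"},
        },
        "resources": {
            "server": {
                "type": "OS::Nova::Server",
                "properties": {
                    "image": {"get_param": "image"},
                    "flavor": {"get_param": "flavor"},
                    "user_data_format": "RAW",  # pass the script through unchanged
                    "user_data": boot_script,
                },
            }
        },
    }


if __name__ == "__main__":
    print(json.dumps(puppet_server_template("puppet.example.org"), indent=2))
```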

"In the past we would have to reinstall all of our physical machines with the right version of the operating system, whereas now we're able to dynamically provision the operating system that people want as they ask for it," Bell said.

Keeping some things separate from OpenStack

CERN's OpenStack deployment required some customization to interface with a legacy network management system and to work with CERN's Active Directory setup, which holds directory information on 44,000 users. Although CERN intends to put all of its servers under OpenStack, other parts of the computing infrastructure will remain separate.

CERN has block storage set aside to go along with OpenStack virtual machine instances, but the bulk storage that holds most of its physics data is managed by a CERN-built system called Castor. This includes 95 petabytes of data on tape for long-term storage, in addition to disk storage.

CERN experiments produce a petabyte of data a second, which is filtered down to about 25GB a second to make it manageable. (About 6,000 servers perform this filtering. Although they are separate from the servers managed by the IT department, they too will be devoted to OpenStack during the two years in which the Large Hadron Collider is shut down for upgrades.)
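The scale of that filtering is easier to see as a ratio. This sketch uses the article's two rates and decimal units (1 PB = 10^15 bytes, 1 GB = 10^9 bytes), and treats both figures as exact even though the article gives them as approximations.

```python
# Decimal (SI) units, as data-rate figures are usually quoted.
PB = 10**15  # bytes
GB = 10**9   # bytes

RAW_RATE = 1 * PB        # bytes/s produced by the experiments (article figure)
FILTERED_RATE = 25 * GB  # bytes/s kept after filtering (article figure)


def reduction_factor(raw_rate: int, kept_rate: int) -> float:
    """How many bytes are produced for every byte that survives filtering."""
    return raw_rate / kept_rate


def kept_percent(raw_rate: int, kept_rate: int) -> float:
    """Share of the raw stream that is kept, as a percentage."""
    return 100 * kept_rate / raw_rate


if __name__ == "__main__":
    print(f"reduction: {reduction_factor(RAW_RATE, FILTERED_RATE):,.0f}x")
    print(f"kept: {kept_percent(RAW_RATE, FILTERED_RATE):.4f}%")
```

In other words, the filters keep roughly one byte in every 40,000, or 0.0025 percent of the raw stream.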

CERN is sticking with Castor instead of moving to OpenStack's storage management system. "We are conservative in this area due to the large volumes and efforts to migrate to a new technology," Bell noted. The migration is smoother on the compute side, since OpenStack builds on existing virtualization technology. Bell said CERN's OpenStack cloud is being deployed by just three IT employees.

"Having set up the basic configuration templates [with Puppet], we're now able to deploy hypervisors at a fairly fast rate. I think our record is about 100 hypervisors in a week," Bell said. "Finding a solution that would run at that scale [150,000 virtual machines] was something a few years ago we hadn't conceived of, until OpenStack came along."