The NIST Definition of Cloud Computing

Why I like IBM CloudBurst

Looking at IBM CloudBurst, these are the thoughts that came to mind...

• IBM presents a pre-integrated solution consisting of hardware (rack, servers, storage, network), virtualization and management software, including content (the WebSphere appliance being an option), plus quick-start services.

• For freedom of choice, IBM has two flavors of servers: x Series (i.e. x86) and POWER (RISC), plus the ability to manage z/Linux on mainframes.

• IBM also provides integrated billing and a nice end-user portal.

• Ordering and pricing models are simple. For instance, IBM's management software is priced by processor core, so the more virtual machines you have, the better.

Don’t trust me? Please read their documentation

(http://www-01.ibm.com/software/tivoli/products/cloudburst/)

or watch their Cloudburst Demo in SWF here:

http://www-03.ibm.com/systems/data/flash/systemx_and_bladecenter/Cloudburst/CloudBurst1_2_techDemo_controller.swf

Amazon's trouble raises cloud computing doubts

SAN FRANCISCO: The blackout at Amazon's EC2 (Elastic Compute Cloud) data centre has cast a shadow over cloud computing, which has been hailed as a sturdy, reliable and inexpensive storage and network solution, especially for small and medium enterprises (SMEs) that cannot afford their own large servers.

In the early morning of April 21 (Pacific Daylight Time), Amazon's EC2 data centre in Virginia crashed, taking down with it several popular websites and small businesses that depend on it. These included favoured social networking destinations like Evite, Quora, Reddit and Foursquare, among others. Now, the question is being asked: if an Amazonian cloud giant can crash so badly, what about the rest? Is cloud computing as reliable as we thought?

"People will now realise that cloud isn't magic like they earlier thought it was," says Lydia Leong, research vice-president and cloud computing expert at technology research and advisory firm Gartner. "They will now realise that cloud is merely about viability and not about continuous availability."

But that's exactly the kind of marketing pitch that sold cloud computing to many small businesses, including the ever-increasing social networking bandwagon. SMEs are now graduating to the next level of cloud computing, using it not just for storage, but also for active computing purposes like communication, sustaining remote workforces and deploying cloud services like remote IT help, cloud operating systems, and so on. The impact of such an outage, therefore, is felt even more.

Online businesses affected by the EC2 outage lost hours of ad revenue and business opportunities, along with some of the precious trust of many loyal followers, a primary pillar of social networking. The losses are hard to quantify.

"Since Amazon isn't giving a customer list, we can only guess. From what we know, it's probably in millions of dollars," says computer scientist David Alan Grier of the eminent Institute of Electrical and Electronics Engineers ( IEEE) Society and author of When Computers were Human and Too Soon to Tell: Essays for the End of the Computer Revolution. "The biggest problem, though, could be the loss of confidence in cloud computing. We still don't know why the Virginia data centre failed."

EC2 holds incredibly valuable data of Amazon's cloud client companies. And yet, Amazon's Virginia centre is, according to sources, remarkably open and vulnerable, located in an ordinary industrial building near Dulles Airport.

Although Amazon will probably recover quickly, the event has damaged its credibility. Time will tell how badly. "If Amazon can explain the problem and make a good case for why the damage may not be big, then it will be fine," says Grier. "If not, the work will go elsewhere. Amazon may be a big player, but there are other big players waiting to step into the game." These include the likes of Google, IBM, Cisco, RedHat and Microsoft (whose cloud ads are all over Silicon Valley), to name a few.

"Amazon's cloud competitors are likely to use this outage as a marketing tool. But it could have happened to anybody," says an ex-HP cloud veteran who currently works at one of Silicon Valley's most promising cloud start-ups, which recently got acquired.

There's an important lesson to be learnt for cloud users from this incident: diversification. "As a business, it makes sense to not depend on a single cloud provider or a single data centre alone," says the cloud veteran. "You would not put all your eggs in one basket, would you?" His company's major product launch almost got jeopardised due to the EC2 outage, but didn't suffer heavily because it is distributed over several cloud providers and data centres.

For the same reason, two start-ups of Nicolai Wadstrom, the serial entrepreneur and investor, didn't crash either. His social Internet start-up Urban Metrics as well as an Internet programmers' community deploy Amazon's EC2, but also their own dedicated servers. "After this crash, we will think more about redundancy," says Wadstrom. "That involves spreading servers on multiple availability zones and perhaps on different providers."

The message for cloud computing users, thus, could not have been clearer. They need to consider their own servers for certain operations, use multiple cloud providers and be able to move seamlessly between them. In true Silicon Valley style, this new need will probably give birth to another crop of start-ups. (Witsbits, one of Wadstrom's new start-ups, already offers a technology to facilitate this seamless integration.)
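As a rough illustration of the "multiple providers, multiple data centres" advice, here is a minimal Python sketch that walks an ordered list of deployments and picks the first one that passes a health check. The endpoint names and the health-check function are hypothetical placeholders invented for this example; they are not any provider's real API, and a production setup would also have to deal with data replication and DNS or load-balancer switching.

```python
from typing import Callable, Iterable, Optional


def pick_endpoint(endpoints: Iterable[str],
                  is_healthy: Callable[[str], bool]) -> Optional[str]:
    """Return the first endpoint that passes the health check, else None."""
    for endpoint in endpoints:
        try:
            if is_healthy(endpoint):
                return endpoint
        except Exception:
            # A health check that raises is treated as "unhealthy".
            continue
    return None


# Hypothetical deployment: two zones at one provider, one zone at a
# second provider, plus a dedicated server. All names are made up.
ENDPOINTS = [
    "provider-a/zone-1",
    "provider-a/zone-2",
    "provider-b/zone-1",
    "own-datacenter",
]


def simulated_health(endpoint: str) -> bool:
    # Simulate an outage that takes down every zone of provider A.
    return not endpoint.startswith("provider-a/")


if __name__ == "__main__":
    print(pick_endpoint(ENDPOINTS, simulated_health))
```

In the simulated outage, traffic falls through both zones of the first provider and lands on the second provider, which is the behaviour the diversification argument relies on.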

The cloud veteran says the whole premise of cloud computing is throw-away cheap computing, storage and network resources. "You just need to know how to use it," he says. "Most small businesses do not know how to build clustered or distributed solutions. So, in a way, Amazon can, and ought to, beef up its solutions so that the user is less affected by such an outage."

SaaS will dominate your cloud strategy

By Phil Wainewright

If an enterprise is focusing on the infrastructure layer to build its cloud strategy, then it’s looking in the wrong direction. SaaS will dominate spending on cloud into 2020 and beyond, according to Forrester projections.

The chart in Larry Dignan’s blog post at the end of last week, reporting Forrester’s projection for a $241 billion cloud computing market by 2020, clearly shows the relative market shares for SaaS, PaaS and IaaS. It’s a graphic reminder that, if an enterprise is focusing on the infrastructure layer to build its cloud strategy, then it’s looking in the wrong direction.

Forrester projects that a massive 80% of spending on public cloud IT next year will be spent on SaaS. A far smaller 11.5% share will be IaaS, and while PaaS is growing fast, that is from a low base and it is set to remain a small proportion of total cloud expenditure relative to SaaS. Even taking private cloud spend into account, public SaaS still holds above 50% of the total market, right through to 2020 and beyond.

There are several reasons why SaaS spending is high and will continue to strengthen.

- SaaS is ready-to-run with next-to-no set-up expenditure — barring initial data import, integration to existing IT assets and implementation of company-specific policies and processes (all of which equally apply with any other layer of cloud). This ease and low cost of implementation make it highly appealing for replacing legacy applications or meeting new requirements without incurring heavy budget commitments.

- The majority of cloud applications are peripheral to an enterprise’s core operations. SaaS has its deepest traction in functions such as salesforce automation, collaboration, people management, e-commerce, content management and customer service. These are still vitally important functions, but there’s less argument around losing control of proprietary business processes and more likelihood of efficiency gains from adopting popular best practice. So the case for adoption is easier to argue.

- SaaS is a low-risk on-ramp to wider cloud adoption. It’s how most enterprises first encounter the public cloud, and once SaaS has been accepted internally, it tends to stay in place as other cloud assets are added.

- An important economic factor in play here is that SaaS vendors are better able to deliver business value because their applications encompass a complete set of functionality that the vendor continues to evolve and enhance. This keeps SaaS less exposed to the commoditization that lower levels of the cloud stack will experience — in particular IaaS.

The other reason SaaS dominates is one that I’ll return to later this week as I complete this series of interlinked posts discussing enterprise cloud strategy. It’s to do with economics and the fact that SaaS providers can leverage multi-tenancy top-to-bottom throughout the cloud stack, while achieving economies of scale that allow them to deliver enterprise-class availability and performance across a diverse customer base. In a nutshell, SaaS vendors are simply able to deliver business applications far more cost-effectively than home-grown PaaS or IaaS implementations.

source: Software as Services

VMware Acquires Online Presentation Application SlideRocket

VMware has just announced the acquisition of online presentation application SlideRocket. Terms of the acquisition were not disclosed.

SlideRocket, which has raised $7 million in funding, is a cloud-based online presentation application that produces slideshows that rival PowerPoint. More than 20,000 customers and 300,000 users leverage SlideRocket to more effectively build, deliver and share presentations.

SlideRocket integrates authoring, asset management, delivery and analytics tools in a single hosted environment that allows you to quickly create presentations, store, tag and search your assets, collaborate with your colleagues, securely share your slides in person or remotely and measure the results. The site also supports web-based conferencing that allows users on different computers to view the same presentation simultaneously and includes a marketplace, an “iTunes For Presentations,” that allows users to purchase assets for their projects.

For VMware, the acquisition of SlideRocket is an investment in bringing business software to the cloud.

Brian Byun, VP and general manager at VMware, said in a release: "VMware is at the forefront of building the core cloud computing infrastructure and enables users to gain ever greater value from cloud-based solutions. SlideRocket’s cloud-based architecture, innovative design, and strong integration with other cloud applications and services have transformed a decades-old business productivity software and redefined the way we consume and communicate information."

This isn’t VMware’s first purchase of a cloud startup. The company bought cloud-based authentication system TriCipher and IT performance analytics company Integrien last August.

One Company's account of surviving AWS failure

tl;dr: Amazon had a major outage last week, which took down some popular websites. Despite using a lot of Amazon services, SmugMug didn’t go down because we spread across availability zones and designed for failure to begin with, among other things.

We’ve known for quite some time that SkyNet was going to achieve sentience and attack us on April 21st, 2011. What we didn’t know is that Amazon’s Web Services platform (AWS) was going to be their first target, and that the attack would render many popular websites inoperable while Amazon battled the Terminators.

Sorry about that, that was probably our fault for deploying SkyNet there in the first place.

We’ve been getting a lot of questions about how we survived (SmugMug was minimally impacted, and all major services remained online during the AWS outage) and what we think of the whole situation. So here goes.

Read the rest on smugmug.com

Security and Data Protection in a Google Data Center

IBM Launches Wave of Cloud Computing Services for the Enterprise

Jean S. Bozman

As the momentum toward building private clouds and hybrid clouds accelerates, IBM is positioning its technology stack, and bringing new service offerings to market, to capitalize on that change.

On April 7, 2011, IBM held an IBM Cloud Forum in San Francisco to discuss the trends in cloud adoption – and to introduce new products and services. Its primary target: private clouds, which will build on IBM servers and storage, IBM middleware and IBM services to help build these private clouds to support business processes. IBM believes that a range of cloud services is being built by their customers, in part to tap the services of the customers' business partners – and that the construction time for those clouds could be reduced by providing pre-tested solutions that support business workloads.

At the forum, IBM executives made clear that the first wave of cloud computing was aimed more at application development and shared computing services for test/development and collaboration, and was often delivered via public cloud services.

The next wave, leveraging cloud computing technologies to support business services, is well underway, they said, as evidenced by IBM projects with customers to build private clouds, customers who are using public clouds to access specific services — and also hybrid clouds, which combine private cloud and public cloud capabilities.

Adding to the custom-built services it already provides to its longtime customers, IBM is announcing that it is opening up new options, by offering pre-built templates, components and services that allow customers to quickly assemble, and use, a private cloud. At the Cloud Forum, IBM introduced a number of services, ranging from the IBM SmartCloud Enterprise offering for public cloud providers to the technology for shared clouds and private clouds with IBM SmartCloud Enterprise+.

IBM is Focusing on Enterprise-Ready Clouds

Steve Mills, senior vice president and group executive of IBM's Software and Systems Group, introduced the Cloud Forum day, speaking about Business Processing as a Service (BPaaS), in addition to the more widely known Software as a Service (SaaS), Platform as a Service (PaaS) and Infrastructure as a Service (IaaS).

BPaaS brings the ability to leverage off-premise infrastructure to support business services, Mills said: "By accessing business services via the Web, [you can use] multi-tenant, shared infrastructure without the need to manage or control the underlying resources." But, in many cases, Mills and other IBM executives said, customer concerns about availability and security have prevented customers from deploying business applications onto cloud infrastructure.

Now, IBM is prepared to work with customers or service providers to build out such systems — and to directly supply these enterprise-level cloud services to businesses, as a form of cost-avoidance for building in-house IT infrastructure for specific workloads. IBM estimates that cloud computing will be a $7 billion business for IBM in 2015, with about $3 billion in incremental revenue, from new opportunities related to cloud computing.

Mills explained this focus on enterprise clouds further at IBM's Impact 2011 conference in Las Vegas the week of April 11: "If I'm running a business, I no longer have to think purely about whether I'm going to build or buy an application," he said. "From now on, I can actually call on somebody else to execute that element of my business." Examples include salesforce automation, accounting payroll, tax remittance and supply chain functions. "So, as you think about cloud, think about SOA really extended to a federated environment. It's not just the integrations you're doing within your business," he said. "It’s how you're integrating into other businesses. How you're tapping into their services and their capacity to complete your business processes." For that, he said, you need "BPM (business process management), choreography and strong connectivity, along with the ability to manage those service connections to ensure privacy and security."

Cloud Forum Announcements

New services and products announced at the IBM Cloud Forum include:

- IBM SmartCloud offerings -- Enterprise and Enterprise+ (read Enterprise Plus). These are cloud services, delivered by IBM via more than 10 IBM cloud-computing centers worldwide (in the U.S., China and in multiple locations in Europe and Africa). It includes automation and rapid provisioning that IBM said could reduce costs by as much as 30% compared with traditional IT development environments.

- The Enterprise service, which is available immediately, is built on IBM's Development and Test Cloud, which is already in place. It is designed to reduce app dev and test/dev costs for IT customers. It will supply availability levels at 99.5% uptime, and will support application development for Microsoft Windows and Linux operating systems. Some customers are also accessing IBM cloud services to run Monte Carlo simulations, and other burst-driven workloads. It will be available on a pay-as-you-go basis, at hourly rates.

- The Enterprise Plus (Enterprise+) service, which is a pilot program now in use at a small number of client sites worldwide, will be released later this year, and is being positioned by IBM as a complement to the Enterprise service. It will provide what IBM calls "a core of multi-tenant services to manage virtual server, storage network and security infrastructure." Enterprise Plus is intended to support private cloud services, with high levels of security and availability. It is designed to support workload isolation; availability levels of 99.9% uptime or more; built-in support for systems management and deployment; and payment/billing functionality. It will target such workloads as analytics, ERP and logistics for Windows, Linux or IBM AIX Unix environments. It will be priced on a monthly usage basis, at prices agreed to by fixed contracts.

- IBM SAP Managed Application Services, an offering that will run on the IBM SmartCloud and will become available later this year. Focused on automating labor-intensive tasks, such as SAP cloning, refreshes and patching, these IBM services will build the services catalog for SAP applications. It will automate the provisioning of SAP environments.

- IBM Lotus Domino applications on the IBM SmartCloud. This will include support for the Lotus Domino Utility Server for LotusLive, for integrated email, social media for business, and access to third-party cloud applications. IBM said this will support a new server licensing model, but did not provide details.
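To put the two availability levels quoted above (99.5% for Enterprise, 99.9% or more for Enterprise+) in perspective, the short Python sketch below converts an uptime percentage into the downtime it permits. It assumes the percentage is measured over a full 365-day year; the announcement does not say which measurement period or exclusions IBM's service terms use, so treat the result as back-of-the-envelope arithmetic only.

```python
HOURS_PER_YEAR = 365 * 24  # 8760 hours, ignoring leap years (an assumption)


def allowed_downtime_hours(uptime_percent: float,
                           period_hours: float = HOURS_PER_YEAR) -> float:
    """Hours of downtime permitted by an uptime percentage over a period."""
    return period_hours * (100.0 - uptime_percent) / 100.0


if __name__ == "__main__":
    for level in (99.5, 99.9):
        hours = allowed_downtime_hours(level)
        print(f"{level}% uptime allows about {hours:.2f} hours of downtime per year")
```

Under that assumption, 99.5% uptime leaves room for roughly 43.8 hours of downtime a year, while 99.9% leaves about 8.76 hours, a fivefold difference.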

IDC Analysis

IDC believes that IBM is aiming to step up its emphasis on the importance of security, availability and reliability for cloud computing – all of which speak to IBM's long-term focus on Business Processing. The reason for this focus on business processing is clear: It plays to IBM's historic strengths in the mainframe and Unix server platform markets. It gives IBM the opportunity to leverage its intellectual property (IP) that supports RAS (reliability, availability, serviceability) and security – and to apply this IP to a new, and rapidly growing, market space. At the same time, IBM needs to make clear that its cloud-enabling technology is addressing all of the server platforms in cloud computing, much of which is built on x86 servers and x86 server virtualization, in addition to mainframes and Unix servers. IBM's cloud services will be delivered on a mix of platforms, as they are in IBM customer sites.

Erich Clementi, senior vice president, IBM Global Technology Services, said, in a printed statement, that IBM's SmartCloud provided "the cost savings and scalability of a shared cloud environment plus the security, enterprise capabilities and support services of a private environment.” IBM has developed best practices that will be leveraged into a set of cloud deployment models—and offered as services, based on what Clementi described as thousands of cloud computing service engagements involving IBM.

It is an astute move to provide cloud services directly, as well as to provide the components of cloud computing to those customers that are building out their own private cloud infrastructure. IBM can play a role in all of those paths to market – as a provider of hardware and software; a partner in large cloud computing projects; working with channel partners to deliver products and services to IT organizations – and providing cloud computing services directly to businesses, on a global basis. With regard to that last role, IBM spent the last few years putting a worldwide cloud computing infrastructure in place. At the Cloud Forum event, several customers spoke about their deployments based on IBM technology, including Macys.com and Univar.

Leveraging its strong positions in the software and services businesses, IBM is well-positioned to deliver managed services on its own cloud computing infrastructure. In addition to the full portfolio of IBM servers – IBM System x x86 servers, IBM Power Systems running IBM AIX Unix and Linux, and IBM System z mainframes – IBM has the WebSphere platform for app-serving and collaborative workloads and IBM Tivoli system management products for provisioning and managing virtual servers supporting business services on SmartCloud.

Challenges and Opportunities

However, IBM will likely find competition in this space, over time, primarily from HP, with its HP CloudSystem offerings, and also with its integrated systems initiative, for which it is actively partnering with Microsoft on providing full technology stacks to support end-to-end business workloads.

HP has the capacity to build out directly offered cloud services, on its own, without partnering with others. But Microsoft has already built out its Azure platform in multiple, large cloud-enabled datacenters located around the world – in keeping with the governmental restrictions of geographic regions or countries, and with security and availability built in. Although Azure has been built out more as a PaaS offering, it is also clear that, over time, business services could be added to that platform. And, given the close cooperation between HP and Microsoft in building end-to-end workloads on combined infrastructure, IBM could eventually see competition from both HP and Microsoft working together.

Other forms of competition could emerge, if providers of cloud infrastructure, like Dell or Cisco, partner with large services providers, inclusive of large telecommunications companies. In addition, Oracle Corp., which initially did not push cloud computing but raised the profile of its cloud offerings in 2010, has the technologies, applications and middleware that would allow it to provide end-to-end managed, cloud-enabled business services by itself, or it could partner with other companies to deploy those end-to-end services across geographic regions.

However, the opportunity to be an early mover in the enterprise cloud services space is likely what informed IBM's earlier investment cycle. IBM's earlier investments in Asia/Pacific — including the build-out of two cloud-computing centers in China — and in Europe and in Africa, where cloud computing is seen as a way to leverage off-premises IT services where computing infrastructure is scarce, will pay off as a new wave of enterprise-focused cloud computing deployment takes hold. The combination of services and software will build on this earlier deployment of cloud computing infrastructure.

Conclusion

IBM's move into enterprise-focused cloud computing is a natural step for the company to take – and it has a time-to-market advantage over many of its competitors, given its deep investments in system management software and business services. Further, it leverages IBM's products and services in a new way that is likely to attract net-new business. However, as it moves forward with its private cloud offerings, IBM must take care to be clear in its communications with customers, so that they can fully understand its intentions and its roadmap in this new, emerging, market space.

source: idc.com

Amazon failure takes down sites across Internet

HP Launches Private Cloud for Test Service

Key features

- Automated test process resulting in cost saving in time to execute tests

- Integrated service monitoring across testing and operations

- Improved consistency between development and test environments

- Improved utilization of test environment

Overview

The HP Private Cloud for Test Service is a comprehensive solution for implementing on-site private cloud capability to support the complete testing lifecycle. Made up of software, consulting, and best practices, it addresses common problems like slow time to market, costly test environments, and the business risk of defects deployed in operations. It provides the foundation and guidance for building a dynamic, scalable test environment that is integrated and automated from development through to operations.

More details on HP Site

Cloud Computing's Tipping Point

Half of federal agencies will be in the cloud within 12 months, according to our new cloud computing survey.

By Michael Biddick

Private clouds promise maximum control and strong security, while commercial cloud services are fast and flexible. Which works best? Government agencies are adopting both, as well as hybrids. The private cloud vs. public cloud debate is rapidly giving way to new models where agencies tap on-demand IT resources from a variety of cloud platforms -- private, commercial, hybrid, software as a service -- based on what best suits their needs.

There are few technology trends the U.S. government is embracing with such fervor as the cloud. In his Federal Cloud Computing Strategy report, published in February, federal CIO Vivek Kundra set a target of shifting 25% of the government's $80 billion in annual IT spending to cloud computing.

How fast will federal agencies make the transition? InformationWeek Government and InformationWeek Analytics surveyed 137 federal IT pros in February to gauge their plans. Our 2011 Federal Government Cloud Computing Survey shows a big jump in the use of cloud services, with 29% of respondents saying their agencies are using cloud services, up 10 points from last year. Another 29% plan to begin using the cloud within 12 months, which means adoption should surpass the 50% mark in the year ahead.

As federal IT teams evaluate where cloud computing fits in their broad IT strategies, they must answer some fundamental questions: Where will cloud services deliver savings over existing systems? How should they provision and manage cloud services? And the big one on everyone's mind, what about data security?

The Obama administration's "cloud first" policy requires agencies to use cloud services where possible for new IT requirements. Cloud computing is more than a new technology services approach; it demands changes to deep-rooted procurement processes and organizational culture. It's also an alternative to capital investment in systems and software, as agencies look to eliminate 800 data centers over the next four years in accordance with the Federal Data Center Consolidation Initiative.

The Office of Management and Budget's influence is shown in our survey, with 21% of respondents saying that compliance with OMB guidance is a driver in their shift to cloud computing.

The economies of scale from shared, centralized infrastructure have the potential to lower usage costs across government. In a pure utility model, users pay only for what they consume, but that doesn't translate to federal IT yet. However, with the prospect of decreasing budgets, agencies must find ways to direct limited funds to their core missions, which may mean having less money available for IT investments. Cloud computing could very well be part of how they cope.

Federal IT pros are clearly looking for savings in the cloud. In our survey, lowering IT costs is the No. 1 business driver of cloud computing, mentioned by 62% of respondents.

Private Clouds For Hire

Agencies face many challenges in moving to the cloud. Top among them is assuring the security of systems and data, identified by 77% of respondents. To address that concern, vendors are offering private clouds with tighter controls over the geographic location of data storage and other aspects of security.

The downside is that all of this comes at a price. The more unique a cloud environment, the harder it is to leverage the scale of the cloud model and, with that, realize the cost savings made possible by a wide user base and cheap resources. It's important that agencies perform their own cost assessments because private clouds and public clouds aren't always the least expensive choices. In general, private clouds don't offer the same savings as public clouds.

The Department of Defense and some intelligence agencies have launched data center improvement initiatives under the private cloud moniker. These efforts seek to employ service catalogs and orchestration technology for configuring and provisioning IT resources. While such initiatives will make data centers more efficient and have other value, it has been hard to demonstrate return on investment that's anywhere near what's possible with commercial cloud services. Private clouds often require a significant up-front investment in equipment, and the complexities of managing IT capacity remain, so the potential for long-term operational savings is difficult to establish.

Making SaaS Work

In the commercial market, SaaS gives companies enterprise-class capabilities without the ownership capital costs of self-managed applications. But once hooked, businesses may get socked with usage fees that, over time, exceed the cost of conventional software deployments. The trade-off may be worth it, however, since they're conserving up-front capital and not building IT empires in support of on-premises software.
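The point about usage fees eventually overtaking the cost of a conventional deployment can be made concrete with a simple break-even calculation. The Python sketch below is only an illustration: the cost model (one up-front payment plus a flat monthly upkeep, versus a flat monthly subscription) and all dollar figures are hypothetical assumptions, not numbers from the survey.

```python
from typing import Optional


def break_even_month(upfront_cost: int,
                     monthly_upkeep: int,
                     saas_monthly_fee: int) -> Optional[int]:
    """First month in which cumulative SaaS fees exceed the cumulative
    cost of an on-premises deployment, or None if that never happens."""
    if saas_monthly_fee <= monthly_upkeep:
        # SaaS costs no more per month, so it never overtakes on-premises.
        return None
    month = 0
    while True:
        month += 1
        saas_total = saas_monthly_fee * month
        on_prem_total = upfront_cost + monthly_upkeep * month
        if saas_total > on_prem_total:
            return month


if __name__ == "__main__":
    # Hypothetical figures: $120,000 up front plus $2,000 a month to run
    # in-house, versus a $5,000 monthly SaaS subscription.
    print(break_even_month(120_000, 2_000, 5_000))
```

With these made-up figures the subscription becomes the more expensive option in month 41, so whether the trade-off is worth it depends on how long the application is expected to stay in service and on the value of conserving capital in the meantime.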

In federal government, many CIOs are dealing with established software systems that represent substantial investments. Furthermore, technical challenges around integration and storage and legal requirements related to where data resides are potential barriers to SaaS. Storing information in the cloud will require "a technical mechanism" to achieve compliance with the records management laws and policies of the National Archives and Records Administration and the General Services Administration, according to the Federal Cloud Computing Strategy report.

Uncle Sam's SaaS portal, Apps.gov, has experienced only limited uptake by agencies, but Apps.gov isn't the only option. Agencies can procure SaaS through requests for proposals or piggyback on existing contract vehicles. The problem becomes the length of the certification and accreditation process required to ensure that a SaaS app satisfies an agency's security policies. A drawn-out procurement and C&A effort can negate any savings SaaS might provide.

Private clouds are the preferred model in government, with 46% of respondents already using or highly likely to use private clouds. But agencies are also looking to plug into cloud services outside of their data centers, and 27% say it's highly likely they will do that through a government portal such as Apps.gov.

What about commercial cloud services from vendors such as Amazon, Google and Microsoft? Going forward, federal IT pros are twice as likely to subscribe to those services when the services have been specifically adapted for government customers, such as Google's Government Cloud. In our survey, 11% are highly likely to adopt commercial cloud services; that jumps to 22% for commercial clouds adapted for government.

How FedRAMP Helps

To reduce barriers to entry, the federal CIO Council has established the Federal Risk and Authorization Management Program, or FedRAMP, which is intended to bring a standard approach to assessing and authorizing cloud services and products. The goal, according to Kundra, is to "allow joint authorizations and continuous security monitoring services for government and commercial cloud computing systems intended for multiagency use."

In theory, FedRAMP will lead to a common security risk model that can be leveraged across agencies. In reality, however, the program will get agencies only part of the way through that process. In our survey, 44% of respondents are unfamiliar with the FedRAMP program, and 26% have conducted their own C&A instead of taking advantage of it. A lot more work is needed to get more vendors through the program and to promote it within agencies.

Another new program, Standards Acceleration to Jumpstart Adoption of Cloud Computing, led by the National Institute of Standards and Technology, also has had limited impact. In our survey, 53% of federal IT pros haven't heard of the initiative, and only 5% find it very helpful.

As part of the SAJACC program, NIST published 25 use cases to help federal IT pros assess cloud-based offerings. Examples include "cloud bursting" from data centers to cloud services to meet spikes in demand, and migrating a queuing-based application to the cloud. While SAJACC may spur ideas, there isn't a lot of actionable information in the case studies to guide selection and adoption of cloud computing.

Real-World Scenarios

Agencies are looking for ways to take advantage of cloud computing, while maintaining data security and protection, and new initiatives take many forms. Here are a few examples of how agencies are getting started.

>> The U.S. Patent and Trademark Office's financial and acquisition system, dubbed Momentum, has one production environment and four test environments, comprising 25 servers with 10 integrations. The production database, including the failover database, is 2 TB, while the test database is 4 TB. (All are Oracle.) The test database is production-sized and refreshed with scrubbed production data periodically. USPTO is assessing the feasibility of moving Momentum to a cloud environment.

>> The Air Force Research Laboratory's Information Directorate is exploring how cloud technology might be used for cybersecurity mission assurance. Its goal is to see if cloud computing can increase the availability and redundancy of continuous operations.

>> The Department of Education is planning to issue an RFP for the operation and maintenance of its Migrant Student Information Exchange, and it's interested in cloud computing as a potential way of providing those capabilities. MSIX, implemented in 2007, contains records for 97% of the migrant student population, with data from 41 states.

>> The Department of Transportation, Federal Aviation Administration, and Air Traffic Organization may explore cloud computing in a test program. This program would provide a virtual production environment that simulates ATO's production environment, which is spread across a number of facilities. The virtual environment would be used to provide email service and to develop and test software.

Private Cloud Considerations

The easier it is for users to request services from a private cloud, the more complex the back-end processes have to be. From the service request to provisioning and managing the service, a mature process environment is required if you’re going to automate those tasks and achieve the benefits of rapid, on-demand provisioning and, ultimately, cost savings.

Unfortunately, the best server provisioning, orchestration, and management tools won’t compensate for a lack of well-conceived processes. While ITIL has been a rallying cry for IT process improvement, many IT organizations aren’t at the level of maturity required for private clouds.

The ability to manage capacity is important in private clouds, though many traditional software tools don’t adapt well to that job. Monitoring application performance, faults, and security in a private cloud often requires a different set of tools. So far, the options that have sprung up in the market are geared toward virtualized data centers. IT organizations must determine how to integrate new cloud-aware tools with their enterprise management platforms to get a complete picture of their computing environments.

Our data shows agencies are very interested in rapidly expanding into the cloud, driven by cost savings, an ability to accelerate delivery of IT services and infrastructure, and the prospect of tight IT budgets and fewer data centers. But the transition presents challenges around security, systems integration, governance, and more.

To maximize the benefits, federal IT teams must enter the cloud with well-conceived business and deployment plans and a readiness to adjust along the way.

Cloud changing face of data centers

Summary

Cloud computing will be one of the major forces shaping data centers over the next five years, say market watchers, who expect next-generation facilities to become smaller and more agile to meet evolving enterprise needs.

In a statement released last month, Gartner highlighted cloud computing as one of the four factors that will change datacenter space requirements in the next five years. "Datacenter managers are beginning to consider the possibility of shifting nonessential workloads to a cloud provider, freeing up much-needed floor space, power and cooling, which can then be focused on more-critical production workloads, and extending the useful life of the data center," said the research firm.

With the shift to offloading nonessential services to a cloud provider, the corporate datacenter landscape will change, according to Gartner. In fact, the research firm predicted that by 2018, datacenter space requirements will shrink to only 40 percent of what is required today as data centers focus on core business services. While these core business services will demand more IT resources, Gartner noted that the shrinking size of server, storage and networking equipment will also contribute to the reduction in datacenter space.

Beyond its role as a strategic sourcing option, cloud computing is also helping to deliver energy efficiency to data centers, said Alex Tay, datacenter services executive at IBM Asia-Pacific. This, he explained in an e-mail interview, is a result of an increase in server utilization rates brought about by virtualization and consolidation of servers.

Flexibility, immediacy hallmark of next-gen data centers

According to the Gartner report, besides cloud computing, datacenter managers also need to focus on three other factors when designing their future leading-edge facilities: smarter datacenter designs, green IT pressures and density issues.

David Cappuccio, managing vice president and chief of research for infrastructure at Gartner, noted in the statement that data centers with traditional methods of design will "no longer work without understanding the outside forces that will have an impact on datacenter costs, size and longevity".

ZDNet Asia spoke to two data center players to find out what the blueprint of their next-generation data centers looks like.

For Big Blue, the next-generation data center has three primary features, said Tay. It is capable of supporting high-density computing, has the ability to optimize capital and operating cost, and boasts a flexible design that allows integration of newer technologies.

In addition, traditional datacenter considerations such as security, location and operations remain, he noted.

Over at Hewlett-Packard, the vision of the next-generation data center is "Instant-On", said Wolfgang Wittmer, senior vice president and general manager of enterprise servers, storage and networking at HP Asia-Pacific and Japan. In his e-mail, he explained that an Instant-On enterprise is one which serves customers, employees, partners and citizens with whatever they want and need instantly at any point in time and through any channel.

While Wittmer acknowledged that cloud delivery is becoming an important paradigm, he noted that multisourcing is the optimal model for businesses.

"Enterprises need to be able to choose from the full spectrum of delivery options," he said, noting that the company's hybrid delivery approach helps clients select the best method of service delivery and then integrate it into their environment.

Hewlett-Packard acquires Melodeo, a provider of cloud-based music delivery systems for mobile devices.

Hewlett-Packard (HPQ) this afternoon confirmed a TechCrunch report that it has acquired Melodeo, a provider of cloud-based music delivery systems for mobile devices.

In a statement, the company said the deal is “another example of our efforts to bring new, innovative technologies to market. We are excited about the potential of this technology to bring the power of cloud-based delivery service to millions of customers.”

The company declined to announce the terms of the deal.

TechCrunch earlier put the size of the deal at between $30 million and $35 million.

(http://blogs.barrons.com/techtraderdaily/2010/06/23/hp-confirms-melodeo-deal/?mod=yahoobarrons)

Defence Signals Directorate (DSD)'s Cloud Computing Security Considerations

Australia releases cloud computing guide

Defence Signals Directorate (DSD), an Australian intelligence agency, has released an 18-page document urging government agencies in the country to carefully consider risks associated with cloud computing before adopting the technology.

The Cloud Computing Security Considerations deals with traditional cloud fears like security, location of servers and Service Level Agreement (SLA) issues. Agencies are advised to “balance the benefits of cloud computing with the security risks associated with the agency handing over control to a vendor”.

DSD urged a strong dose of caution toward vendors who may “insecurely transmit, store and process the agency’s data”.

“Vendor’s responses to important security considerations must be captured in the SLA,” advised the paper. “Otherwise the customer only has vendor promises and marketing claims that can be hard to verify and may be unenforceable.”

The paper urged agencies to consider in which countries their data is stored, backed up and processed, which country hosts the failover or redundant data centre, and whether or not the vendor will notify the agency of any change in these arrangements. Agencies were also asked to pick vendors who store and manage data within Australia itself.

Cloud Computing Reference Architecture – Review of the Big Three

By Srinivasan Sundara Rajan

Cloud Reference Architecture

A Reference Architecture (RA) provides a blueprint of a to-be model with a well-defined scope, the requirements it satisfies, and the architectural decisions it realizes. By delivering best practices in a standardized, methodical way, an RA ensures consistency and quality across development and delivery projects.

Major players in the cloud computing field are:

- Hewlett Packard

- IBM

- Microsoft (unlike those of HP and IBM, Microsoft's Cloud Reference Architecture is specific to Hyper-V)

The intention here is not to give an opinion on which is better, but to understand each vendor's point of view.

For the rest of the article, Cloud Computing Reference Architecture is abbreviated as CCRA.

All the materials discussed in this article are taken from the publicly available content (Google Search) of the respective vendors.

Service Consumer in CCRA

IBM: Cloud Service Consumer or Client is viewed as ‘In House IT’, which can use an array of cloud integration tools to get the desired business capability. Consumers could also be individuals within the enterprise; ‘In House IT’ can be treated as a general collection of all of them.

HP: Cloud Service Consumer is represented in the ‘Demand’ layer of CCRA. However, the Demand layer covers more than the consumer; it also includes the service catalog and portal access for provisioning services. The service provider plays an important role in the Demand layer too, as the provider is the one who makes services available as Configure to Order through an automated service system.

Microsoft: The tenant/user self-service layer provides an interface for Hyper-V cloud tenants or authorized users to request, manage, and access services, such as VMs, that are provided by the Hyper-V cloud architecture.

Service Provider in CCRA

IBM: Providers are the ones who deliver cloud services to clients. Their offerings follow four basic models:

- Infrastructure-as-a-Service

- Platform-as-a-Service

- Software-as-a-Service

- Business-Process-as-a-Service

HP: Service Providers play their role in both the ‘Demand’ and ‘Deliver’ layers of CCRA. In the Deliver layer, Service Providers intelligently orchestrate the configuration and activation of services and schedule the provisioning of the necessary resources by aggregating data from the service configuration model.

Microsoft: Taking a service provider's perspective toward delivering infrastructure transforms the IT approach. If infrastructure is provided as a service, IT can use a shared resource model that makes it possible to achieve economies of scale. This, combined with the other principles, helps the organization to realize greater agility at lower cost. As is evident, this Reference Architecture concentrates more on IaaS delivery.

Service Creator in CCRA

IBM: Service creators are the ones who use service development tools to develop new cloud services. This includes both the development of runtime artifacts and management-related aspects (e.g., monitoring, metering, provisioning, etc.). "Runtime artifacts" refers to any capability needed for running what is delivered as-a-service by a cloud deployment. Examples are JEE enterprise applications, database schemas, analytics, golden master virtual machine images, etc.

HP: The service creator plays a role in the ‘Supply’ layer, which functions as the dynamic resource governor. It integrates a dynamic, mixed-workload utility function across a heterogeneous environment with a model-based orchestration system to optimize the supply of resources to fulfill service requests. Again, there are templates and tools that support the Service Creator in the ‘Supply’ layer.

Microsoft: The orchestration layer must provide the ability to design, test, implement, and monitor these IT workflows. The orchestration layer is the critical interface between the IT organization and its infrastructure and transforms intent into workflow and automation.

Infrastructure in CCRA

IBM: "Infrastructure" represents all infrastructure elements needed on the cloud service provider side, which are needed to provide cloud services. This includes facilities, server, storage, and network resources, how these resources are wired up, placed within a data center, etc. The infrastructure element is purely scoped to the hardware infrastructure.

HP: The "Infrastructure" layer is abstracted behind the ‘Supply’ layer and consists of power and cooling, servers, storage, network, software, information and other external clouds.

Microsoft: The "Infrastructure" layer covers servers, storage, networking and virtualization.

Management Platform in CCRA

IBM: Cloud management platform consists of Operational Support Services (OSS) and Business Support Services (BSS).

Business Support Services represents the set of business-related services exposed by the Cloud platform, which are needed by Cloud Service Creators to implement a cloud service. Some of them are:

- Customer Account Management

- Service Catalog Offering

- Pricing/Billing/Metering

- Accounts Receivable and Payable

- Provisioning

- Incident and Problem Management

- Image Life Cycle Management

- Platform and Virtualization Management

Demand Layer

- User Access Management

- Service Offer Management

- Billing and Rating

- Request Processing & Activation

- Usage Metering

- Dynamic Work Load Management

QoS (Quality of Service) in CCRA

IBM: Security, Resiliency, Performance & Consumability are cross-cutting aspects of QoS spanning the hardware infrastructure and Cloud Services. These non-functional aspects must be viewed from an end-to-end perspective, including the structure of CCRA itself, the way the hardware infrastructure is set up (e.g., in terms of isolation, network zoning setup, data center setup for disaster recovery, etc.) and how the cloud services are implemented. The major aspects of QoS are:

- Threat and Vulnerability Management

- Data Protection

- Availability & Continuity Management

- Ease of doing business

- Simplified Operations

Demand Layer

- Quality Of Experience

- SLA Compliance

- Quality Of Service

- Resource Monitoring and Dynamic allocation

Summary

The availability of a Reference Architecture from each of the three leading industry pioneers is a good sign for enterprises looking to adopt a cloud computing-based delivery model. As we have seen, there is a fair amount of similarity among them, which should pave the way for interoperability and help avoid vendor lock-in for cloud applications. The following diagram summarizes the common building blocks in the reference architectures published by the big three. We also find that the Microsoft Reference Architecture covers more physical aspects of a cloud platform.

References

IBM Presentation : Building Your Cloud Using IBM Cloud Computing Reference Architecture

HP Cloud Reference Architecture - HP Software Universe 2010 - Presentation on Slide Share

Microsoft Hyper-V Cloud Reference Architecture White Paper

Source: http://cloudcomputing.sys-con.com/node/1752894

Gartner - Key Issues for Cloud Computing, 2011

CloudFoundry - VMware PaaS

Platform as a Service (PaaS) is not a trivial thing to build, deploy, or maintain: first you have to orchestrate all the services internally, then you have to abstract all of that work behind a facade, and finally, you have to market, sell, and maintain it. Not surprisingly, an investment that large has been the domain of only a few well-funded companies.

Hence, it has been interesting to watch VMware roll out their CloudFoundry service as an open source project! It is an entire PaaS platform, which they will offer as a hosted service, but which is also available for anyone to run within their own company or datacenter - you can now run a "mini Heroku", or an "EngineYard cloud" on your own servers! But marketing aside, the engineering behind the project is also very interesting: it is orchestrated entirely in Ruby! No Erlang, no JVMs, all Ruby under the hood.

CloudFoundry: Rails, Sinatra, EventMachine

Out of the gate, CloudFoundry is able to provision and run multiple platforms and frameworks (Rails, Sinatra, Grails, node.js), as well as provision and support multiple supporting services (MySQL, Redis, RabbitMQ). In other words, the system is modular and is fairly simple to extend. For a great technical overview, check out the webinar.

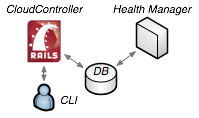

To orchestrate all of these moving components, the "brains" of the platform is a Rails 3 application (CloudController) whose role is to store the information about all users, provisioned apps, services, and maintain the state of each component. When you run your CLI (command line client) on a local machine, you are, in fact, talking to the CloudController. Interestingly, the Rails app itself is designed to run on top of the Thin web-server, and is using Ruby 1.9 fibers and async DB drivers - in other words, async Rails 3!

A companion to the Rails application is the Health Manager, a standalone daemon that imports all of the CloudController ActiveRecord models and actively compares what is in the database against all the chatter between the remaining daemons. When a discrepancy is detected, it notifies the CloudController - a simple and effective way to keep all the distributed state information up to date.
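
The comparison the Health Manager performs can be sketched in a few lines. This is an illustration in Python rather than the project's Ruby, and it is not the actual CloudFoundry code: the function name, the dictionary standing in for the database, and the list standing in for heartbeat chatter are all assumptions made for the example.

```python
from collections import Counter


def find_discrepancies(expected, heartbeats):
    """Compare desired state against observed heartbeats.

    expected   -- {app name: desired instance count}; stands in for the
                  state stored in the CloudController database
    heartbeats -- iterable of app names, one entry per running instance
                  heard on the message bus
    Returns {app name: (desired, running)} for every app that disagrees.
    """
    running = Counter(heartbeats)
    report = {}
    for app in sorted(set(expected) | set(running)):
        desired = expected.get(app, 0)
        observed = running.get(app, 0)
        if desired != observed:
            report[app] = (desired, observed)
    return report
```

For example, if the database expects two instances of "blog" and one of "api", but heartbeats arrive from one "blog", one "api" and an unknown "ghost" app, the report flags "blog" as under-provisioned and "ghost" as running without a record. In the real system that report would be what gets sent back to the CloudController.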

Orchestrating the CloudFoundry Platform

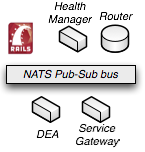

The remainder of the CloudFoundry platform follows a consistent pattern: each service is a Ruby daemon which queries the CloudController when it first boots, subscribes to and publishes to a shared message bus, and also exposes several JSON endpoints for providing health and status information. Not surprisingly, all of the daemons are also powered by Ruby EventMachine under the hood, and hence use Thin and simple Rack endpoints.

The router is responsible for parsing incoming requests and redirecting the traffic to one of the provisioned applications (droplets). To do so, it maintains an internal map of registered URLs and the provisioned applications responsible for each. When you provision or decommission an app server instance, the router table is updated, and the traffic is redirected accordingly. For small deployments, one router will suffice, and in larger deployments, traffic can be load-balanced between multiple routers.
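
A minimal sketch of such a routing table, written in Python for illustration. The class and method names are hypothetical, and the round-robin selection is an assumption: the description above says only that traffic is redirected to one of the registered instances, not how that instance is picked.

```python
class RouteTable:
    """Maps a registered URL to the app server instances that serve it."""

    def __init__(self):
        self._routes = {}  # url -> list of backend addresses
        self._cursor = {}  # url -> number of lookups served so far

    def register(self, url, backend):
        """Called when a new app server instance is provisioned."""
        backends = self._routes.setdefault(url, [])
        if backend not in backends:
            backends.append(backend)

    def unregister(self, url, backend):
        """Called when an app server instance is decommissioned."""
        backends = self._routes.get(url, [])
        if backend in backends:
            backends.remove(backend)
        if not backends:
            # Last instance gone: drop the URL from the table entirely.
            self._routes.pop(url, None)
            self._cursor.pop(url, None)

    def lookup(self, url):
        """Return the next backend for url, or None if nothing is registered."""
        backends = self._routes.get(url)
        if not backends:
            return None
        index = self._cursor.get(url, 0) % len(backends)
        self._cursor[url] = index + 1
        return backends[index]
```

Registering two instances for the same URL makes successive lookups alternate between them; unregistering one sends all traffic to the survivor, and unregistering both makes the lookup return None.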

The DEA (Droplet Execution Agent) is the supervisor process responsible for provisioning new applications: it receives the query from the CloudController, sets up the appropriate platform, exports the environment variables, and launches the app server.
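
The "export the environment variables, then launch" step can be illustrated with a short Python sketch. This is not the DEA's own code: the function name and the PORT variable are assumptions, and for simplicity the sketch waits for a short-lived child process to finish, whereas a real agent would supervise a long-running app server.

```python
import os
import subprocess
import sys


def launch_app(command, env_overrides):
    """Run command with the current environment plus env_overrides.

    Returns the completed process so the caller can inspect its output.
    """
    env = dict(os.environ)     # start from the agent's own environment
    env.update(env_overrides)  # export the app-specific variables
    return subprocess.run(
        command, env=env, capture_output=True, text=True, check=True
    )


# Hypothetical usage: the child process reads the port it was assigned.
result = launch_app(
    [sys.executable, "-c", "import os; print(os.environ['PORT'])"],
    {"PORT": "3000"},
)
```

The child sees the exported value without the agent's own environment being modified, which is the property that lets one supervisor launch many apps with different settings.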

Finally, the services component is responsible for provisioning and managing access to resources such as MySQL, Redis, RabbitMQ, and others. Once again, very similar architecture: a gateway Ruby daemon listens to incoming requests and invokes the required start/stop and add/remove user commands. Adding a new or a custom service is as simple as implementing a custom Provisioner class.
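
The gateway-plus-provisioner split might look roughly like the Python sketch below. It is an analogy, not the CloudFoundry interface: the class names, method names and request format are all hypothetical, chosen only to mirror the start/stop and add/remove user commands mentioned above.

```python
class Provisioner:
    """Hypothetical interface: one method per gateway command."""

    def start(self, instance):
        raise NotImplementedError

    def stop(self, instance):
        raise NotImplementedError

    def add_user(self, instance, user):
        raise NotImplementedError

    def remove_user(self, instance, user):
        raise NotImplementedError


class InMemoryProvisioner(Provisioner):
    """Toy custom service that tracks instances and users in a dict."""

    def __init__(self):
        self.instances = {}  # instance name -> set of user names

    def start(self, instance):
        self.instances.setdefault(instance, set())

    def stop(self, instance):
        self.instances.pop(instance, None)

    def add_user(self, instance, user):
        self.instances[instance].add(user)

    def remove_user(self, instance, user):
        self.instances[instance].discard(user)


class Gateway:
    """Maps incoming request dicts onto provisioner method calls."""

    COMMANDS = ("start", "stop", "add_user", "remove_user")

    def __init__(self, provisioner):
        self.provisioner = provisioner

    def handle(self, request):
        command = request["command"]
        if command not in self.COMMANDS:
            raise ValueError("unknown command: " + command)
        return getattr(self.provisioner, command)(*request.get("args", []))
```

Under this design, supporting a new service means writing one more Provisioner subclass; the gateway and the rest of the platform stay unchanged.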

Connecting the pieces with NATS

Each of the Ruby daemons above follows a similar pattern: on load, query the CloudController, and also expose local HTTP endpoints to provide health and status information. But how do these services communicate with each other? Well, through another Ruby-powered service, of course! The NATS publish-subscribe message system is a lightweight topic router (powered by EventMachine) which connects all the pieces! When each daemon first boots, it connects to the NATS message bus, subscribes to topics it cares about (e.g., provision and heartbeat signals), and also begins to publish its own heartbeats and notifications.
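
The publish-subscribe pattern itself is easy to sketch. The Python class below is an in-process toy, not the NATS client API or wire protocol: the class name, method names and topic strings are assumptions used only to show how a topic router decouples the daemons from one another.

```python
from collections import defaultdict


class MessageBus:
    """Toy topic router: delivers each message to that topic's subscribers."""

    def __init__(self):
        self._subscribers = defaultdict(list)  # topic -> list of callbacks

    def subscribe(self, topic, callback):
        """Register callback to be invoked for every message on topic."""
        self._subscribers[topic].append(callback)

    def publish(self, topic, message):
        """Deliver message to all subscribers; return how many received it."""
        callbacks = self._subscribers.get(topic, [])
        for callback in list(callbacks):
            callback(message)
        return len(callbacks)


# Hypothetical usage: a health monitor listens for heartbeats from a DEA.
bus = MessageBus()
received = []
bus.subscribe("heartbeat", received.append)
delivered = bus.publish("heartbeat", {"id": "dea-1", "status": "ok"})
ignored = bus.publish("provision", {"app": "blog"})
```

The publisher never needs to know who is listening: the heartbeat reaches the one subscriber, while the provision message, which nobody subscribed to, is simply dropped.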

This architecture allows CloudFoundry to easily add and remove routers, DEA agents, service controllers and so on. Nothing stops you from running all of the above on a single machine, or across a large cluster of servers within your own datacenter.

Distributed Systems with Ruby? Yes!

Building a distributed system with as many moving components as CloudFoundry is no small feat, and it is really interesting to see that the team behind it chose Ruby as the platform of choice. If you look under the hood, you will find Rails, Sinatra, Rack, and a lot of EventMachine code. If you ever wondered if Ruby is a viable platform to build a non-trivial distributed system, then this is a great case study and a vote of confidence by VMware. Definitely worth a read through the source!

The beginning of the smartphone-as-PC

Master Talking Points and FAQ

Citrix announces Citrix Receiver™ for webOS

Summary

HP hosted an analyst day to discuss the company’s overall vision and strategy. A key part of this update focused on the forthcoming HP webOS operating system support on new tablets and smartphones. HP also updated the analyst community on future plans to extend the webOS operating system into other HP platforms including PCs and laptops. HP is the worldwide market share leader in PCs and laptops primarily supporting Microsoft Windows.

Citrix was featured at the HP analyst event to demonstrate and preview Citrix Receiver on new webOS-based devices, including the HP TouchPad. Citrix was the only enterprise partner featured on stage with a live demo on the TouchPad at the event. Citrix Receiver support for webOS is planned to be made available concurrent with the shipping of the HP TouchPad in late Q2 2011.

Key Talking Points

· Citrix Receiver makes webOS devices enterprise ready at launch by providing webOS devices secure and easy access to over 500,000 enterprise applications on Day 1 of product availability.

· Citrix Receiver for webOS will be made available as a free user download on Day 1 of launch of the new HP TouchPad.

· By supporting webOS, Citrix Receiver provides a win-win for both webOS users and enterprise customers:

o Citrix allows IT organizations to embrace consumerization of IT trends that are driving new device adoption in the workplace – saving money, time, and gaining flexibility. Virtual computing technologies from Citrix enable the secure delivery of enterprise Windows, web, and SaaS applications to any user on any device. Using Citrix, IT can let users choose the devices that best support their workstyle, ultimately driving satisfaction and productivity without sacrificing security or services management.

o Traditional enterprise apps and data were never built for the kind of flexibility and security challenges this kind of user choice and mobility introduces. Many enterprises are now struggling to embrace the “consumerization of IT” reality.

o With Citrix Receiver, all data and applications are centrally managed in the data center, giving the IT organization complete visibility and control over access to company applications, IP and data. This gives IT organizations the “Power to say Yes” to enabling the latest webOS devices across the enterprise.

· At the HP Analyst event on March 15, Citrix demonstrated an early version of our HTML5-based Receiver which will support webOS, showcasing the HP TouchPad accessing enterprise Windows based applications securely with a high definition HDX experience. Citrix was the only enterprise partner featured by HP on stage with the TouchPad demo.

· Citrix Receiver for webOS is expected to be available in June 2011, concurrent with the availability of the new HP TouchPad. Users will be able to download Receiver for webOS free from either citrix.com or the webOS App Catalog.

· Citrix Receiver for webOS extends the Citrix commitment to have a Receiver available for virtually every computing device – Windows PCs/laptops, Macs, iPhones, iPads, Android smartphones/tablets, Blackberry, and Windows Mobile devices – as part of the “Citrix Receiver Everywhere” strategy.

Frequently Asked Questions

1. What is Citrix Receiver?

Citrix Receiver is a lightweight, universal client technology that enables IT to deliver virtual desktops, Windows, web and SaaS applications and content as an on-demand service to any user or device. With Citrix Receiver, IT has complete control over security, performance, and user experience, with no need to own or manage the physical device or its location.

2. What did Citrix announce at the HP Analyst event on March 15?

Citrix demonstrated Receiver for webOS on stage at the HP Analyst Day event on March 15th. Citrix Receiver for webOS will provide easy and secure access for users to their Enterprise applications and desktops. With Citrix Receiver for webOS, users can access any enterprise app via their enterprise app store, in addition to their personal apps made available from the webOS App Catalog.

3. What is the benefit of Citrix Receiver for customers (End users and enterprise)?

· Simple, self-service access to virtual desktops, apps & IT services from any device (PCs/Macs, netbooks, tablets and smartphones)

· Hi-definition user experience on any network or device

· Simple, intuitive user interface, consistent across different devices

· Secure data and communications from the data center to the device

· Instant updates to users with complete IT control and visibility

· IT enterprises can now say yes to enabling a new generation of mobile devices brought into the workplace

4. What devices will be enabled for Receiver for webOS?

Citrix Receiver for webOS will be made available for use with the HP TouchPad on Day 1 of device availability. Through its partnership with HP, Citrix expects Receiver for webOS to work on Day 1 on future HP webOS-based device releases. Citrix Receiver will also be enabled for webOS smartphone devices such as the HP Veer and HP Pre 2. Beyond the TouchPad, Citrix expects to support all major webOS device releases into the future.

5. Who are the target customers (possible business and use cases)?

Citrix Receiver is ideal for anyone who needs to work on-the-go and needs seamless access to their business desktop, applications and documents. Receiver delivers a high performance user experience and a consistent look and feel across virtually any device, allowing mobile workers to be as productive as possible while in the office, at a customer meeting or in transit.

6. What can I expect from Citrix Receiver on webOS?

Citrix Receiver will be optimized for usage on webOS devices. Receiver allows the user to take advantage of the larger screen size of the TouchPad device in addition to device capabilities such as multitasking and scrolling. Receiver touch-enables Windows applications, even legacy apps that were never designed to be touch capable. Receiver will provide users a consistent experience across any mobile device, whether a webOS device such as the TouchPad, a Blackberry device, or a device running the latest Android or iOS operating systems.

7. How will Citrix Receiver for webOS be made available?

Citrix Receiver for webOS is expected to be made available as a free and simple download from both citrix.com and the webOS App Catalog. Once “added” to the device, instant, secure and self-service access to enterprise apps and desktops is enabled with a single touch interface.

8. Is a Citrix environment needed to run Citrix Receiver for webOS?

While Citrix Receiver for webOS will be available as a free download for webOS device owners, users should contact their IT administrator or Help Desk to verify that the organization is running a Citrix environment. In addition, their workplace IT administrators can provide instructions on how to enable their devices to gain access to the corporate network and application infrastructure. Citrix XenDesktop or XenApp servers centrally manage corporate applications, and with Citrix Receiver downloaded on a webOS device, users will be able to securely and instantly access the corporate applications, full virtual desktops and IT services that are made available by the enterprise.

A demo of Citrix Receiver and the power of XenDesktop infrastructure is available to all Citrix Receiver end users through http://citrixcloud.net.

9. Why did Citrix participate in the HP Analyst Day?

Citrix, as the leader in providing secure access to virtual apps and desktops, was the only enterprise application vendor that was invited by HP to participate on stage with the TouchPad at the HP Analyst event. At the event, Citrix Receiver demonstrated how it will enable the TouchPad to be enterprise ready with access to 500,000+ business applications on day one. “Receiver Everywhere” is a top strategic imperative for Citrix and Receiver for webOS is another key milestone in the ongoing Citrix commitment of enabling business applications on the latest and most popular mobile devices and platforms in the market.

10. What does this announcement mean to the HP – Citrix relationship?

The announcement signals a growing partnership between HP and Citrix. Customers have told us that consumer devices that can be used for BOTH personal and enterprise computing, such as the HP TouchPad, are highly preferred. By partnering with HP, Citrix allows any webOS-based device to be effective for enterprise computing when connected to the Internet or enterprise networks. Our partnership is not exclusive, although we are the acknowledged leader with our commitment to enable Receiver on every device. HP’s announcement and inclusion of Citrix today clearly demonstrates Citrix leadership in delivering secure access to enterprise IT services to any webOS-based device, anywhere.

11. How does Receiver for webOS fit in with HP’s broader converged infrastructure and instant on enterprise vision?

The close partnership between HP and Citrix to enable webOS for the TouchPad is an important extension of our Receiver Everywhere strategy. HP’s ISS and Blade Servers power over 50% of Citrix customer deployments. Enabling Receiver for webOS allows HP customers to enjoy a seamless experience on HP endpoint devices such as the TouchPad, by leveraging their Citrix XenDesktop infrastructure, optimized to run in HP Converged Infrastructure environments.

12. Does Citrix Receiver support additional mobile platforms?

Citrix Receiver supports a variety of platforms and devices, including PCs, Macs, netbooks, smartphones and tablets. Receiver is offered as a free download for each of the leading mobile platforms on the market, in addition to versions for PCs, Macs, Linux and Java.

13. How many users are utilizing Citrix Receiver and Citrix technology?

Since early 2010, Citrix Receiver has been downloaded nearly two million times on smartphone and tablet devices. More than 230,000 organizations worldwide use Citrix virtual computing infrastructure every day to deliver virtual desktops and apps to their employees.

14. What makes Citrix Receiver unique?

Citrix is the ONLY vendor that can deliver secure enterprise applications (Windows, web or SaaS) to the vast majority of devices and platforms (Windows PCs/laptops, Macs, iPhones, iPads, Android smartphones/tablets, BlackBerry, webOS and Windows Mobile) under our “Citrix Receiver Everywhere” strategy and commitment.

15. How does Citrix Receiver compare to other solutions in the market?

Citrix Receiver allows end users and customers to leverage their Citrix environment through the secure delivery of business, web and SaaS applications to any device. Because it supports PCs, Macs, Linux, Java and the most popular mobile device platforms, no product comparable to Citrix Receiver exists in the market today.

16. Where can I get more information about Citrix Receiver?

More information on Citrix Receiver can be found at http://www.citrix.com/receiver.

Top 9 Benefits of Cloud Computing

Dave Nielsen and Jim O'Neil talk about the benefits of using cloud computing for startup companies.

To see the videos, go here: http://www.codeproject.com/KB/showcase/Dave-Nielsen-Azure.aspx

Dell's $1B bet on Cloud Solutions for the Virtual Era

Dell has changed a great deal over the past several years. Among the changes is a significant shift in attitude towards the development and ownership of intellectual property, as the company transitions from a product-led business approach to a strategy organized around solutions and services.

At last summer's Financial Analyst Meeting, the company detailed plans to ‘double the size’ of its $16 billion server and storage enterprise technology and services business over the next three years.

Hewlett-Packard has ambitions to control the technology 'stack' and exploit the cloud market

Hewlett-Packard (HP) hosted industry analysts in San Francisco on 15 and 16 March 2011.

The event included top-level management executives from HP as well as the first public appearance of newly appointed CEO and President, Léo Apotheker.

Apotheker outlined his vision of “controlling the entire stack” and becoming a leading supplier in cloud computing – a business that generated more than USD1 billion last year.

Best Practices in Data Center and Server Consolidation

Advances in technology have made second- and third-wave consolidation projects now viable

The economy has put added pressure on many organizations to utilize the capacity they have in place more efficiently, whether it is the physical data centers they have or the servers that reside within them.

As such, even though many organizations have past experience with consolidation projects, they are finding they are under increased pressure to optimize their costs and possibly further consolidate.

The good news is that advances in technology have made second- and third-wave consolidation projects now viable. We will look at some key trigger points in deciding when to consolidate, best practices in how to get started and recommendations for each phase of a consolidation project, including best practices in relocation or closure of an existing data center.

Global Information Technology Report 2010-2011

Sweden and Singapore continue to top the rankings of The Global Information Technology Report 2010-2011, Transformations 2.0, released by the World Economic Forum, confirming the leadership of the Nordic countries and the Asian Tiger economies in adopting and implementing ICT advances for increased growth and development. Finland jumps to third place, while Switzerland and the United States are steady in fourth and fifth place respectively. The 10th anniversary edition of the report focuses on ICT’s power to transform society in the next decade through modernization and innovation.

The Nordic countries lead the way in leveraging ICT. With Denmark in 7th and Norway in 9th place, all are in the top 10, except for Iceland, which is ranked in 16th position. Led by Singapore in second place, the other Asian Tiger economies continue to make progress in the ranking, with both Taiwan, China and Korea improving five places to 6th and 10th respectively, and Hong Kong SAR following closely at 12th.

With a record coverage of 138 economies worldwide, the report remains the world’s most comprehensive and authoritative international assessment of the impact of ICT on the development process and the competitiveness of nations. The Networked Readiness Index (NRI) featured in the report examines how prepared countries are to use ICT effectively on three dimensions: the general business, regulatory and infrastructure environment for ICT; the readiness of the three key societal actors (individuals, businesses and governments) to use and benefit from ICT; and their actual usage of available ICT.

Under the theme Transformations 2.0, this 10th anniversary edition explores the coming transformations powered by ICT, with a focus on the impact they will have on individuals, businesses and governments over the next few years.

http://www.weforum.org/reports/global-information-technology-report-2010-2011-0

http://www3.weforum.org/docs/WEF_GITR_Report_2011.pdf [9.95MB]

Cisco throws networking into OpenStack cloud

by Dave Rosenberg

Cisco Systems, one of the world's largest technology companies, signaled last week that it has more than a passing interest in cloud infrastructure by submitting a design for OpenStack: Network as a Service (NaaS).

Cisco's proposal is not the first for NaaS, but I believe it signifies an important acceptance not just of a change to the way that we consume compute and networking but a shift in how big companies will make OpenStack both the literal and metaphorical Apache Web server for cloud services.

The networking component of both public and private clouds has been woefully underserved, either because big networking vendors see it as a risk--after all, they can't easily monetize a virtual network the way they could a physical one--or because the pace and mores of open-source development don't fit with their own development models.

The OpenStack: NaaS proposal provides a network abstraction layer and set of APIs to enable a broader set of services and APIs than are currently available, including:

- Requesting and acquiring network connectivity by OpenStack:Compute for interconnecting two VM instances, either on a single virtual network (single vNIC) or with multiple vNICs attached to different virtual networks.

- Insertion of network services (e.g. firewalls, load balancers, Wide Area Acceleration Services) at the appropriate virtual networks, and dynamic requests for "adaptive" network resources.

- Monitoring and management of resources that require visibility or consumption tracking, such as disaster recovery, network health, and chargeback/billing services.

- A wealth of new networking capabilities, including the potential for new services such as SLA management.
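
To make the idea of a network abstraction layer more concrete, here is a minimal Python sketch of the first three capabilities listed above. It is illustrative only: the class and method names (VirtualNetwork, NetworkService, attach_vm, insert_service, usage_report) are hypothetical and are not taken from Cisco's proposal or from any OpenStack API.

```python
from dataclasses import dataclass, field


@dataclass
class VirtualNetwork:
    """Hypothetical virtual network: tracks attached vNICs and inserted services."""
    name: str
    vnics: list = field(default_factory=list)     # (vm_id, vnic_index) pairs
    services: list = field(default_factory=list)  # e.g. "firewall"


class NetworkService:
    """Hypothetical abstraction layer that a compute service could call into."""

    def __init__(self):
        self.networks = {}

    def create_network(self, name):
        net = VirtualNetwork(name)
        self.networks[name] = net
        return net

    def attach_vm(self, vm_id, network_name):
        """Give vm_id a new vNIC on the named network and return its index."""
        index = sum(
            vm == vm_id
            for net in self.networks.values()
            for vm, _ in net.vnics
        )
        self.networks[network_name].vnics.append((vm_id, index))
        return index

    def insert_service(self, network_name, service):
        """Insert a network service (assumed here to be just a label)."""
        self.networks[network_name].services.append(service)

    def usage_report(self):
        """Count vNICs per network, as a chargeback or billing feed might."""
        return {name: len(net.vnics) for name, net in self.networks.items()}


naas = NetworkService()
naas.create_network("web")
naas.create_network("db")
naas.attach_vm("vm-1", "web")
naas.attach_vm("vm-2", "web")
naas.attach_vm("vm-2", "db")          # second vNIC for vm-2, on another network
naas.insert_service("web", "firewall")
print(naas.usage_report())
```

In this toy model, the two VMs are interconnected because they share the "web" network, while vm-2 also reaches "db" through a second vNIC. A real service would of course have to program physical and virtual switches rather than update a dictionary.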

OpenStack has enjoyed some substantial success as well as one notable disgruntled community member thus far--very common to important open-source projects. But the strength of a project is demonstrated by its community, which to become mainstream requires adoption and participation from not just individuals but from companies that see the project as an important part of future infrastructure. We saw this happen with Apache and Linux, and I believe we'll see it with OpenStack as well.

The Evolution of Cloud Computing Into an Essential IT Strategy

Cloud computing is moving from a new idea to the next big strategy for optimizing how IT is used. We'll establish the reality of cloud computing for enterprises and consumers, and examine the maturity of cloud computing, its direction and the markets it affects.

IBM to battle Amazon in the public cloud

IBM has opened the door on a public cloud Infrastructure as a Service offering for the enterprise.

The company has quietly added the Smart Business Cloud - Enterprise to its Smart Business Cloud product line. SBC - Enterprise is a pay-as-you-go, self service (for registered IBM customers) online platform that can run virtual machines (VMs) in a variety of formats, along with other services from IBM.

"IBM stepped into the cloud early, but the market has been very dynamic the past two years." (Dana Gardner, principal analyst with Interarbor Solutions)

The Smart Business Cloud - Enterprise is a big step up from IBM's previous pure Infrastructure as a Service (IaaS) offering, the Smart Business Development and Test Cloud. Users can provision stock VMs running Red Hat Enterprise Linux, SUSE Linux Enterprise Server and Microsoft Windows Server, or they can choose from an arsenal of preconfigured software appliances that IBM, in part, manages and that users consume. These include Industry Application Platform, IBM DB2, Informix, Lotus Domino Enterprise Server, Rational Asset Manager, Tivoli Monitoring, WebSphere Application Server, Cognos Business Intelligence and many others.

In what may tell the back story of IBM's own cloud computing development path, all of the images and applications being offered from SBC - Enterprise presently run in Amazon's Elastic Compute Cloud environment. Not all of the announced IBM cloud applications run in the IBM compute environment.

Screenshots and a video demo show a familiar sight by now for cloud users: a point-and-click provisioning system in a Web interface. Apparently sensitive to its enterprise audience, IBM has taken some pains to offer higher-end features, including access and identity management control, security and application monitoring tools, and VPN and VLAN capabilities, as well as VM isolation. IBM technical support will also be available.

IBM has been a consistent supporter of open source software for the enterprise market, and the inclusion of Red Hat Enterprise Linux (RHEL) and SUSE Linux is an indication that IBM feels those products have the chops for big customers running Linux. Being based on Linux (Blue Insight) also makes it easy for IBM to port its technology into an Amazon-type network.

Analyzing IBM's entrance into public cloud

Some industry observers say they believe the cloud-based product disclosure is overdue, and a step in the right direction. The move is a natural one if IBM wants to extend the lofty position it currently holds in on-premises application integration.

"IBM needs to start talking about integration as a service (in the cloud)," said Dana Gardner,